# Start Dgraph with a schema which specifies the predicates to index.ĭgraph -schema ~/work/src//dgraph-io/benchmarks/data/goldendata.schemaĭgraphloader -r ~/work/src//dgraph-io/benchmarks/data/neo4j/ : string date schema file specifies creation of an index on the two predicates. Using the following schema and the commands below. We loaded an equivalent data set into Dgraph dbms.query_cache_size=0ĭgraph does not do any query caching. You can set it by modifying the following variable in conf/nf. Generally, it does not make sense to benchmark queries with caching turned on, but we decided to set it because that’s the default behavior Neo4j users see. Once with query caching turned off, and then with query caching turned on. Then we created some indexes in Neo4j for the best query performance. neo4j-admin import -database film.db -id-type string -nodes:Film $DATA/films.csv -nodes:Genre $DATA/genres.csv -nodes:Director $DATA/directors.csv -relationships:GENRE $DATA/filmgenre.csv -relationships:FILMS $DATA/directorfilm.csv We loaded these files into Neo4j using their import tool./neo4j start After a painful process, we converted our data into five CSV files, one for each type of entity (film, director, and genre) and two for the relationships between them so that we could do some queries. But given the difficulties we faced loading large amounts of data into Neo4j, we resorted to a subset dataset of 1.3 million N-Quads containing only certain types of entities and relationships. We would have ideally liked to load up the entire 21 million RDF dataset so that we could compare the performance of both databases at scale. Is 160x faster than Neo4j for loading graph data. In fact, the Neo4j loader process never finished (we killed it after a considerable wait).

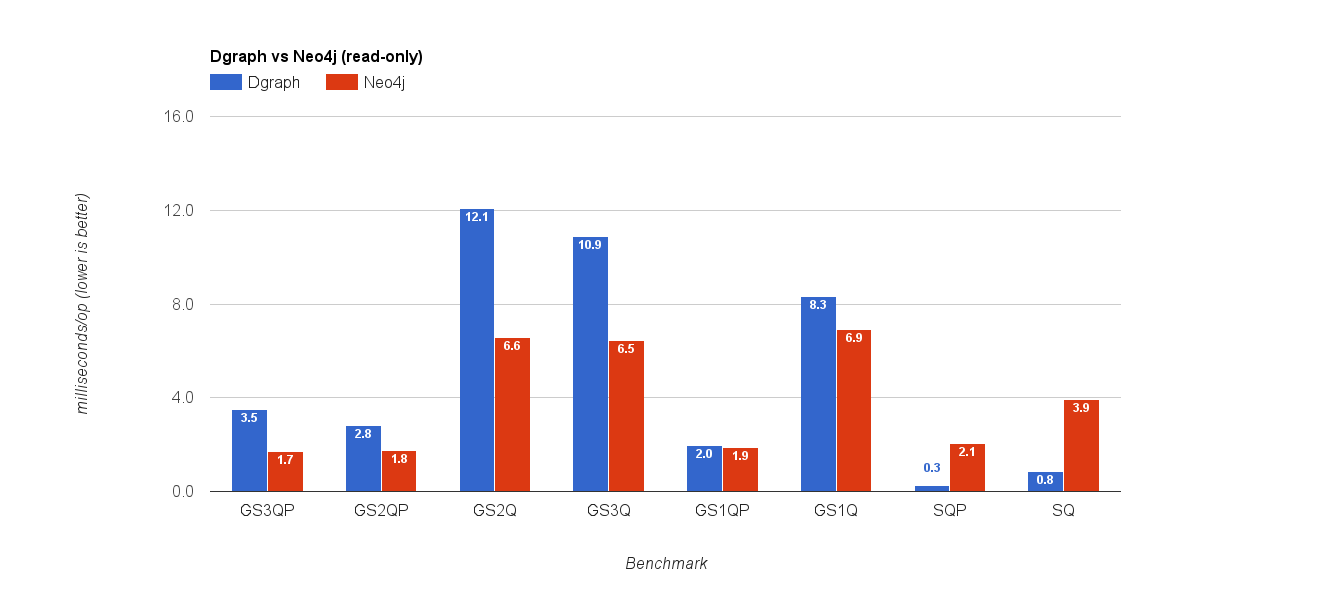

Outperformed Neo4j 46.7k to 280 N-Quads per second. With the golden data set of 1.1 million N-Quads, Dgraph For Dgraph, we typically send 1000 N-Quads per request and have 500 concurrent connections. In fact, that’s a sure way to make Neo4j data corrupt and hang the system 3.2

Note that we only used 20 concurrent connections and batched 200 N-Quads for each request because Neo4j doesn’t work well if we increase either the number of connections or N-Quads per connection beyond this. In the video below, you can see a comparison of loading 1.1 million N-Quads on Dgraph It is the fastest way we could find to load RDF data into Neo4j. This program used Bolt, a new protocol by Neo4j. We wrote a small program similar to the Dgraphloader which reads N-Quads, batches them and tries to load them concurrently into Neo4j. So, we looked into the next best option to load graph data into Neo4j. While this is okay for relational data, this doesn’t work for graph data sets, where each entity can be of multiple types, and relationships between entities are fluid. One file for each type of entity, and one file per relationship between two types of entities. If we were to try and convert it to CSV format, we would end up with 100s of CSV files. In our 21 million dataset, we have 50 distinct types of entities and 132 types of relationships between these entities. The loader for Neo4j accepts data in CSV format which is essentially what SQL tables have. The first problem we faced was that Neo4j doesn’t accept data in RDF format directly 3.1 We feel this data is highly interconnected and makes a good use case for storing in a graph database. Have been using the Freebase film data for our development and testing. We wanted to load a dense graph data set involving real world data. Dgraph from master branch (commit: 100c104a).Thinkpad T460 laptop running Ubuntu Linux, Intel Core i7, with 16 GB RAM and SSD storage.We have divided this post into five parts: Is nearing its v0.8 release, we wanted to spend some time comparing it against Neo4j, which is the most popular graph database. Neo4j vs Dgraph - The numbers speak for themselves
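Both the Bolt loader we wrote for Neo4j and the Dgraphloader follow the same basic pattern. They read N-Quads, group them into fixed-size batches, and send the batches over a bounded number of concurrent connections. The following is a minimal Python sketch of that pattern under stated assumptions. It is not the code of either loader. The names `batches`, `load` and `send` are hypothetical, and `send` stands in for one request to the database. The defaults of 200 N-Quads per batch and 20 connections mirror the Neo4j settings described above.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Iterator, List


def batches(lines: Iterable[str], size: int) -> Iterator[List[str]]:
    """Yield lists of at most `size` N-Quad lines, skipping blanks and comments."""
    batch: List[str] = []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        batch.append(line)
        if len(batch) == size:
            yield batch
            batch = []  # start a fresh list so the yielded one is not mutated
    if batch:
        yield batch  # final, possibly short, batch


def load(
    lines: Iterable[str],
    send: Callable[[List[str]], None],
    batch_size: int = 200,
    connections: int = 20,
) -> int:
    """Send batches through at most `connections` concurrent workers.

    `send` is a hypothetical stand-in for one request to the database
    (for example, one Bolt transaction) and should raise on failure.
    Returns the number of N-Quads sent.
    """
    sent = 0
    with ThreadPoolExecutor(max_workers=connections) as pool:
        # Every batch is submitted up front; a loader for very large files
        # would bound how many batches are queued at once.
        futures = [(pool.submit(send, b), len(b)) for b in batches(lines, batch_size)]
        for future, count in futures:
            future.result()  # re-raises any exception raised inside send
            sent += count
    return sent


if __name__ == "__main__":
    demo = ['_:film1 <name> "Blade Runner" .'] * 450
    print(load(demo, lambda batch: None))  # prints 450
```

With the Dgraph settings mentioned in this post, the same function would be called with `batch_size=1000, connections=500`. The thread pool's `max_workers` is what caps the number of in-flight requests, which is the knob that had to stay low for Neo4j.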
